A security researcher was awarded a bug bounty of $107,500 for identifying security issues in Google Home smart speakers that could be exploited to install backdoors and turn them into wiretapping devices.

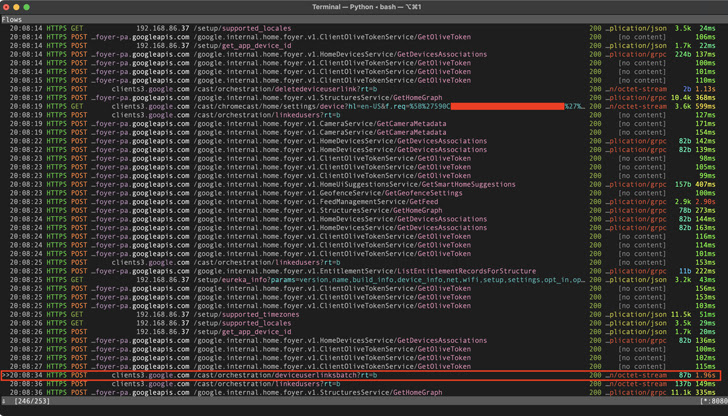

The flaws "allowed an attacker within wireless proximity to install a 'backdoor' account on the device, enabling them to send commands to it remotely over the internet, access its microphone feed, and make arbitrary HTTP requests within the victim's LAN," the researcher, who goes by the name Matt, disclosed in a technical write-up published this week.

Such malicious requests could not only expose the Wi-Fi password but also give the adversary direct access to other devices connected to the same network. Following responsible disclosure on January 8, 2021, Google remediated the issues in April 2021.

The problem, in a nutshell, has to do with how the Google Home software architecture can be leveraged to add a rogue Google user account to a target's home automation device.

In an attack chain detailed by the researcher, a threat actor looking to eavesdrop on a victim can trick the individual into installing a malicious Android app, which, upon detecting a Google Home device on the network, issues stealthy HTTP requests to link an attacker's account to the victim's device.

Taking things a notch higher, the researcher also found that staging a Wi-Fi deauthentication attack to force a Google Home device off the network makes the device enter a "setup mode" and create its own open Wi-Fi network.

The threat actor can subsequently connect to the device's setup network and request details like device name, cloud_device_id, and certificate, and use them to link their account to the device.

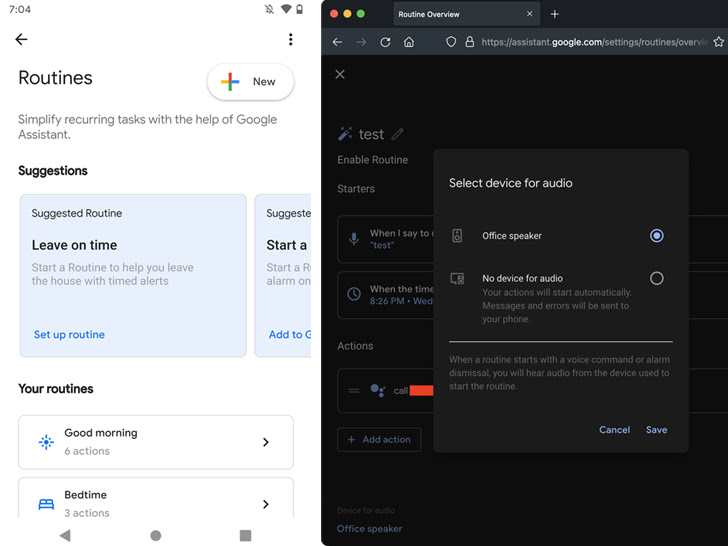

Regardless of the attack sequence employed, a successful link lets the adversary abuse Google Home routines to turn the volume down to zero and call a specific phone number at any time, spying on the victim through the device's microphone.

"The only thing the victim may notice is that the device's LEDs turn solid blue, but they'd probably just assume it's updating the firmware or something," Matt said. "During a call, the LEDs do not pulse like they normally do when the device is listening, so there is no indication that the microphone is open."

Furthermore, the attack can be extended to make arbitrary HTTP requests within the victim's network and even read files or introduce malicious modifications on the linked device that would get applied after a reboot.

This is not the first time such attack methods have been devised to covertly snoop on potential targets through voice-activated devices.

In November 2019, a group of academics disclosed Light Commands, a technique that exploits a vulnerability in MEMS microphones to let attackers use light to remotely inject inaudible and invisible commands into popular voice assistants like Google Assistant, Amazon Alexa, Facebook Portal, and Apple Siri.
