As artificial intelligence (AI) continues to advance, so does its potential for misuse, with deepfakes emerging as a formidable threat. Deepfakes leverage sophisticated AI algorithms to create hyper-realistic, deceptive content that can manipulate perceptions and spread misinformation. This article delves into the dangers posed by deepfakes, the implications for individuals and society, and strategies for securing against this rising tide of AI-generated deception.

1. The Deepfake Phenomenon: Unmasking AI-Driven Deception

Provide a concise definition of deepfakes and their origins. Highlight notable instances of deepfake misuse and the impact on public perception.

2. AI’s Deceptive Artistry: How Deepfakes are Created

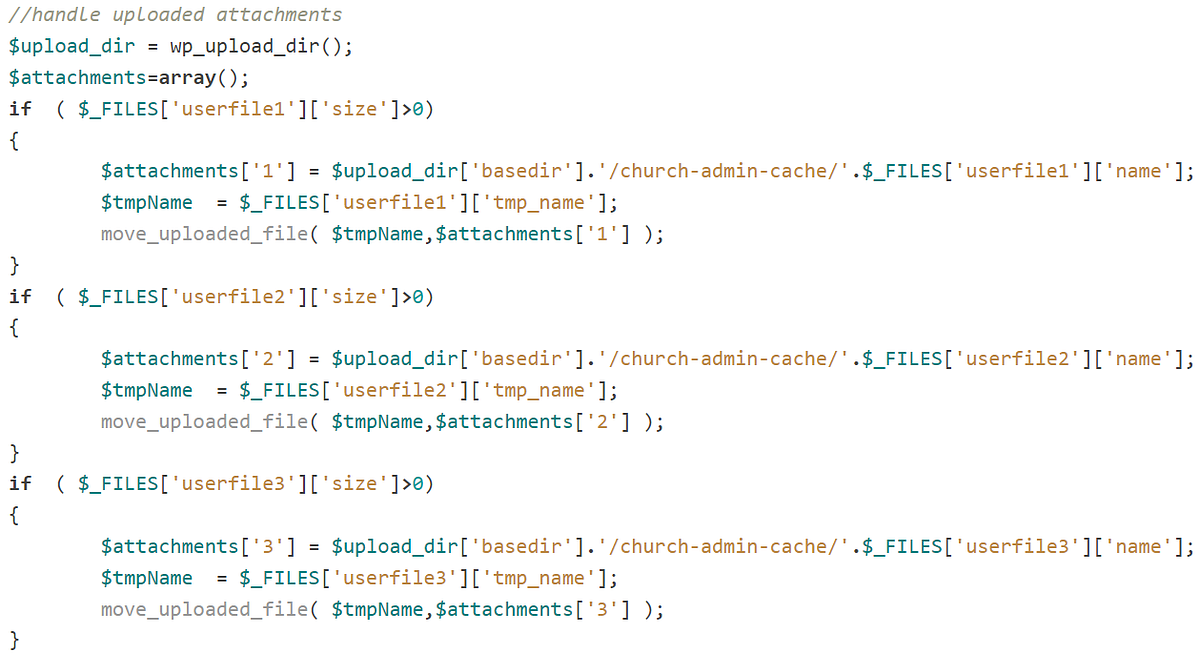
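
Generative adversarial networks (GANs), one of the techniques behind deepfakes, train two models against each other: a generator that produces fake samples and a discriminator that scores how likely a sample is to be real. The sketch below is only a minimal illustration of that tug-of-war on single scores, not the training code of any particular deepfake tool. The function names `discriminator_loss` and `generator_loss` are hypothetical, and the generator uses the common "non-saturating" loss variant as an assumption.

```python
import math


def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Cross-entropy loss for the discriminator on one real and one fake sample.

    d_real is the discriminator's score D(x) for a real sample,
    d_fake is its score D(G(z)) for a generated sample.
    Both are probabilities strictly between 0 and 1.
    """
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake: float) -> float:
    """Non-saturating generator loss (assumed variant).

    The loss is small when the discriminator scores the fake as real.
    """
    return -math.log(d_fake)


# A discriminator that confidently separates real from fake has a low loss.
confident = discriminator_loss(d_real=0.9, d_fake=0.1)

# A discriminator that outputs 0.5 for everything cannot tell them apart,
# which is the point the generator is trying to push it towards.
confused = discriminator_loss(d_real=0.5, d_fake=0.5)

# The generator is rewarded as its fakes become more convincing.
weak_fake = generator_loss(d_fake=0.1)
strong_fake = generator_loss(d_fake=0.9)
```

In this toy setting, `confident` is smaller than `confused`, and `strong_fake` is smaller than `weak_fake`: the discriminator improves by telling samples apart, while the generator improves by making that harder.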

Explain the underlying technology behind deepfakes, including generative adversarial networks (GANs) and deep learning. Illustrate the process of creating convincing deepfake content, such as manipulated videos and audio recordings.

3. The Ripple Effect: Deepfake Dangers in the Digital Age

Explore the potential consequences of deepfake proliferation, including misinformation, identity theft, and reputational damage. Discuss the broader societal impact of deepfakes on trust and authenticity.

4. Deepfake Targets: Individuals, Organizations, and Democracy

Examine the varied targets of deepfake attacks, from high-profile individuals to corporations and political entities. Discuss the challenges posed by deepfakes in the context of democratic processes and public discourse.

5. Beyond Faceswaps: The Evolution of Deepfake Technology

Highlight the expanding capabilities of deepfake technology, including voice cloning and the creation of entirely synthetic personas. Discuss the challenges in detecting more advanced forms of AI-generated deception.

6. Fighting Fire with Fire: AI-Based Deepfake Detection

Explore the ongoing efforts to develop AI-driven tools for detecting and mitigating deepfakes.
