The FBI warns that scammers are increasingly using artificial intelligence to improve the quality and effectiveness of their online fraud schemes, ranging from romance and investment scams to job hiring schemes.

"The FBI is warning the public that criminals exploit generative artificial intelligence (AI) to commit fraud on a larger scale which increases the believability of their schemes," reads the agency's public service announcement (PSA).

"Generative AI reduces the time and effort criminals must expend to deceive their targets."

To help raise awareness, the PSA presents several examples of AI-assisted fraud campaigns, along with many of the topics and lures these campaigns commonly use.

The agency has also shared advice on identifying and defending against those scams.

Common schemes

Generative AI tools are perfectly legal aids to help people generate content. However, they can be abused to facilitate crimes like fraud and extortion, warns the FBI.

This abuse can involve AI-generated text, images, audio, cloned voices, and videos.

Common schemes the agency has recently uncovered include the following:

- Using AI-generated text, images, and videos to create realistic social media profiles for social engineering, spear phishing, romance scams, and investment fraud schemes.
- Using AI-generated videos, images, and text to impersonate law enforcement, executives, or other authority figures in real-time communications to solicit payments or information.
- Using AI-generated text, images, and videos in promotional materials and websites to lure victims into fraudulent investment schemes, including cryptocurrency fraud.
- Creating fake pornographic images or videos of victims or public figures to extort money.
- Generating realistic images or videos of natural disasters or conflicts to solicit donations for fake charities.

Artificial intelligence has been widely used for over a year to create cryptocurrency scams featuring deepfake videos of popular celebrities such as Elon Musk.

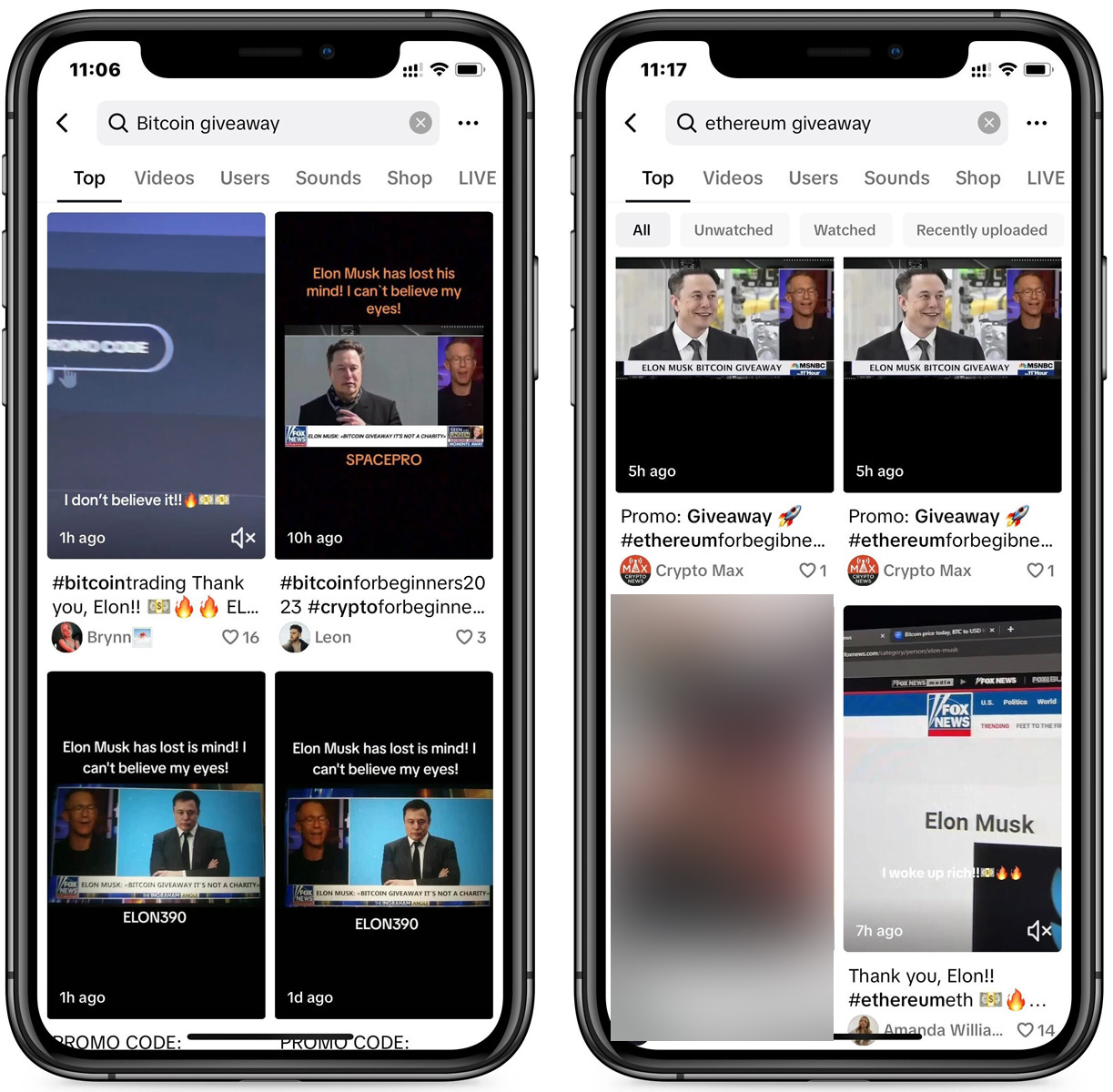

Deepfake crypto scams on TikTok (Source: BleepingComputer)

More recently, Google Mandiant reported that North Korean IT workers have been using artificial intelligence to create personas and images that make them appear to be non-North Korean nationals, helping them gain employment with organizations worldwide.

Once hired, these individuals are used to generate revenue for the North Korean regime, conduct cyber espionage, or even attempt to deploy information-stealing malware on corporate networks.

The FBI's advice

Although generative AI tools can make fraud schemes so believable that they are very hard to distinguish from reality, the FBI still proposes some measures that can help in most situations.

These are summarized as follows:

- Create a secret word or phrase with your family to verify identity.
- Look for subtle imperfections in images and videos (e.g., distorted hands, irregular faces, odd shadows, or unrealistic movements).
- Listen for unnatural tone or word choice in calls, which can indicate AI-generated voice cloning.
- Limit public content featuring your image or voice; set social media accounts to private and restrict followers to trusted people.
- Verify callers by hanging up, researching their claimed organization, and calling back using an official number.
- Never share sensitive information with strangers online or over the phone.
- Avoid sending money, gift cards, or cryptocurrency to unverified individuals.

If you suspect that you have been contacted by scammers or have fallen victim to a fraud scheme, you are advised to report it to the FBI's Internet Crime Complaint Center (IC3).

When submitting your report, include all available information about the person who approached you, any financial transactions, and the details of your interactions.
